Last time, we left the reader with a question under the heading “Assess the frequency of potential outcomes.” This post tries to adequately answer that question.

When I was sitting down in front of my book, I would count the words on a given line. But which line would I count?

In order to make my data collection process robust, I would count the words in a randomly generated line, as pictured here. A uniform random number generator picked a page number and a line number, and I manually rounded the random numbers. When I counted the words in that line, I added them to my spreadsheet. (For now you can ignore column D, as it was part of a later data exploration.)

It took me approximately one minute per line to repeat this process, which I repeated n = 30 times. The astute reader may recall from basic statistics that this will help us apply the Central Limit Theorem (CLT). [I landed at Charlotte, CLT, while I was working on this very project. The link between math and aviation tickled my fancy.] We will apply the CLT to our problem in this way: the average number of words per line is distributed normally. (I’ve glossed over some technical details, for the sake of moving the discussion forward, a statistical heresy of the greatest proportions.)

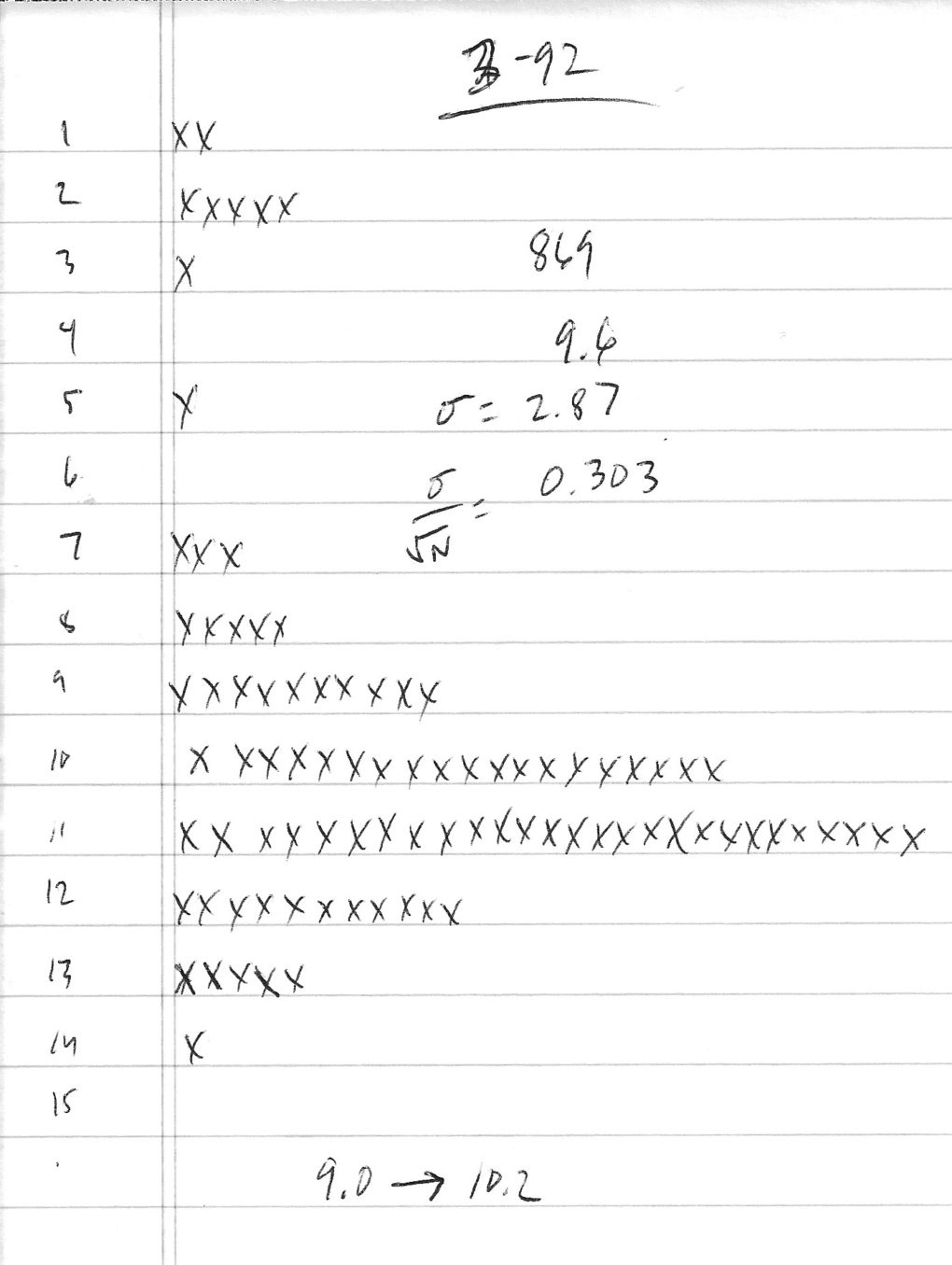

As I thought about the problem, I started doodling on a small pad of paper and created a stem and leaf plot with the data I had amassed. I actually drew four such plots–one for each set of n = 30 and one that combined all 90 points, as pictured here. The left column shows the frequency (e.g., 1 word per line in row 1, through 15 words per line in row 15). Only twice in all 90 lines that I examined did the line have only 1 word per line. Never did a line have 4 words per line, in my sample. This particular stem and leaf plot was visually appealing for a variety of reasons.

Among the many reasons I was so happy about the plot I created, the first was the simple fact that I created it on paper with a pen. No computational tools were necessary. This stem and leaf plot also doubles as a histogram, without any additional work. Even though it is “on its side,” I could easily see the bell curve in the majority of the data.

Just by looking at this plot, I can see that the central tendency of this data is between 10 and 11 words per line. The mean of the data is 9.6, because the outliers–those lines with very few words–skew the mean. This is a known weakness of the mean as a description of central tendency. The mode–the most frequently occurring value–is 11. The median is 10. The reader can find both the mode and median easily from this plot without any computation tools. These characteristics of the stem and leaf plot are all delightful, especially for those who don’t like the detailed calculations required.

Now we are ready to assess the frequency of potential outcomes. There is approximately one way to estimate the maximum number of words in this book: We assume the maximum number of words per line and the maximum number of lines per page. That is exactly how we arrived at the estimate of 128,592 in the previous post. We used a similar line of reasoning to estimate a lower bound, of which there are very few ways to do that.

What we did with the stem and leaf plot and the data above is estimate the mean number of words per line. If we use the mean number of words per line and the number of lines in the book, we end up with an estimate of 75,456 words. We could collect another sample of 30 lines, and we would expect the mean number of words per line to be “close” to this estimate. The CLT allows us to quantify “how close.”

If we use 9 words per line on average, our total words is approximately 70,000, and with ten words per line on average we estimate 80,000 words total. Most of the time (approximately 95% of the time), we expect our estimate to land somewhere near these numbers.

Conclusion

This brings us back to the original question and problem statement. We wanted to estimate the number of words in a book, and we used this example to illustrate that counting is hard for at least three reasons.

- It may be too hard to conduct an exhaustive count.

- There are many methods and choosing a method is not simple.

- Estimating the number of words in the book introduces error.

It is important to emphasize that in this example, we can ascertain the absolute truth–the number of words in this particular book has a “right answer” that does not change. However, our counting method is subject to the limitations of our humanity–we will make mistakes when we are trying to count all the words.

We examined several methods for estimating the number of words, some of which were crude, but we introduced simple heuristics (the 3Q) for refining these estimates. Finally, we improved our estimate drastically by collecting a minimal amount of hard data, and then the central limit theorem played a major part in helping us estimate the number of words in the book based on the data we collected.

This process also taught us several things about our ability to estimate in this domain–words on a page wasn’t something I had a lot of intuition about, and I probably had too much trust in my intuition.

Finally, I hope it showed how useful it is to use simple tools, tools we can employ with pen and paper.

For Further Contemplation

In future columns on this topic, I want to introduce two more counting problems.

- Counting bears in Northwest Florida

- Counting paper towels used per day in my house.

Did you know that the Florida Black Bear is its own subspecies of Black Bear? I was delighted to discover this and many more facts about the local omnivore during a trip down a google-search-rabbit hole. It is much more difficult to count Florida Black Bears, because I can’t be everywhere in NW Florida at once, and I can’t see all the bears at once. I can count the bears that pass a given geographic location, and I can randomize the locations at which I make my observations. By the time I count all the bears, one might be dead, and more might be born. This is counting problem all its own, and it may not have “a right answer” that’s true in the sense that counting words in a book is true.

Counting paper towels is yet a third kind of counting problem. Obviously, I use a different number of paper towels every day. This quantity is actually a random variable, unlike the first case. We are farther from true than the bear example or the counting words example.

Who knew counting could be so hard?

Note: while writing this post, the auto-correct function kept telling me that I spelled the word “than” wrong. This is another statistics problem…